Scaling Terraform agents using the Terraform Operator on Amazon EKS Auto Mode

Learn how to deploy and autoscale HCP Terraform agents using the Terraform Operator on EKS Auto Mode, enabling efficient resource utilization and automated capacity management for platform teams.

HashiCorp Cloud Platform (HCP) Terraform provides organizations with a managed service for infrastructure automation, offering multiple execution mode options to suit different requirements. While many teams can effectively use HCP Terraform with standard remote execution workflows, certain scenarios, such as managing resources within private networks, call for HCP Terraform Agents.

HCP Terraform Agents are self-hosted execution environments that run Terraform operations locally while maintaining seamless integration with the HCP Terraform service. They provide flexibility for organizations that need to maintain control over the execution environment while still benefiting from HCP Terraform’s collaborative features and workflow management.

As organizations that use agents scale their infrastructure deployments, managing HCP Terraform Agents becomes increasingly complex. Platform teams must balance two competing goals: having enough agent capacity for peak workloads, and avoiding idle resources during quiet periods. Manual scaling is time-consuming and error-prone. It often results in either costly over-provisioning or under-provisioning that delays runs. The problem intensifies when multiple teams share the same agent pools, because their combined demand fluctuates unpredictably.

In this blog post, we’ll demonstrate how combining the HCP Terraform Operator with Amazon EKS Auto Mode produces a self-adjusting agent scaling solution. The solution responds to workload demand and removes the need for manual intervention while balancing performance and cost.

The HCP Terraform Operator advantage for agent scaling

The HCP Terraform Operator for Kubernetes simplifies deploying and managing Terraform agents by handling their lifecycle through Kubernetes custom resources. While the basic deployment of agents is straightforward, the operator’s autoscaling capability is particularly valuable for platform teams.

The most significant advantage is the operator’s ability to automatically scale the number of agents based on pending Terraform workloads. This ensures:

Sufficient capacity during high-demand periods

Resource conservation during low utilization

Elimination of manual scaling operations

Without autoscaling, platform teams face several challenges:

Over-provisioning agents “just in case,” wasting resources

Under-provisioning agents leading to execution delays

Manual intervention to adjust capacity as needs change

Why use EKS Auto Mode with the HCP Terraform Operator to manage Terraform agents

EKS Auto Mode extends AWS management beyond the Kubernetes control plane to include the underlying infrastructure that enables the smooth operation of your workloads. For platform teams managing Terraform deployments, this brings several important advantages:

Streamlined operations: EKS Auto Mode automates cluster node provisioning, scaling, security patching, and upgrades. This reduces the operational burden on platform teams, who can focus on delivering infrastructure as a service rather than maintaining Kubernetes clusters.

Dynamic scalability with Karpenter: EKS Auto Mode uses Karpenter for node scaling, which is well suited to Terraform agent workloads. Unlike traditional cluster autoscaling solutions, Karpenter watches for unschedulable pods and provisions nodes based on the pods' actual resource requirements. When Terraform runs spike, Karpenter launches new nodes to host the additional agent pods. Conversely, when runs decrease, Karpenter disrupts and terminates nodes that are no longer needed, optimizing resource usage.
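To make the scale-out behavior concrete, here is a toy model in Python. Pending pods are placed on existing nodes first, and a new node is launched only for a pod that fits nowhere. This is a simplified first-fit-decreasing sketch over a single resource (memory). It is not Karpenter's actual scheduling algorithm, which also accounts for CPU, instance types, and pricing. The `Node` class, the pod sizes, and the node capacity are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical node with a fixed amount of allocatable memory (MiB)."""
    capacity_mib: int
    pods: list[int] = field(default_factory=list)  # memory requests of placed pods

    @property
    def free_mib(self) -> int:
        return self.capacity_mib - sum(self.pods)


def place_pending_pods(nodes: list[Node], pending_mib: list[int], new_node_mib: int) -> int:
    """Place pending pods, launching a new node only when a pod fits nowhere.

    First-fit decreasing on a single resource (memory). Mutates `nodes` and
    returns how many nodes were launched.
    """
    launched = 0
    for request in sorted(pending_mib, reverse=True):
        if request > new_node_mib:
            raise ValueError(f"a {request} MiB pod cannot fit on the node size offered")
        # Prefer existing capacity before provisioning anything new.
        target = next((n for n in nodes if n.free_mib >= request), None)
        if target is None:
            # The pod is unschedulable on current nodes, so provision a node for it.
            target = Node(capacity_mib=new_node_mib)
            nodes.append(target)
            launched += 1
        target.pods.append(request)
    return launched
```

With an empty pending list nothing is launched, which matches the empty cluster shown later in this post. Ten 512 MiB pods against 4096 MiB nodes, by contrast, launch two nodes: eight pods fill the first node and two land on the second.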

Enhanced security: EKS Auto Mode uses immutable AMIs with read-only root filesystems and SELinux mandatory access controls. Nodes are automatically replaced within a maximum 21-day lifecycle (which you can reduce).

Cost optimization: EKS Auto Mode consolidates workloads onto fewer nodes when possible and terminates unused instances, helping control costs for your agent infrastructure.

Unlike EKS with Fargate, EKS Auto Mode is fully Kubernetes conformant and supports all EC2 purchase options, including Spot Instances. This gives you the flexibility to tune your Terraform agent infrastructure to your specific workload characteristics and cost requirements.

Let’s examine how to implement autoscaling with the Terraform Operator on EKS Auto Mode.

Deploying the HCP Terraform Operator on EKS Auto Mode

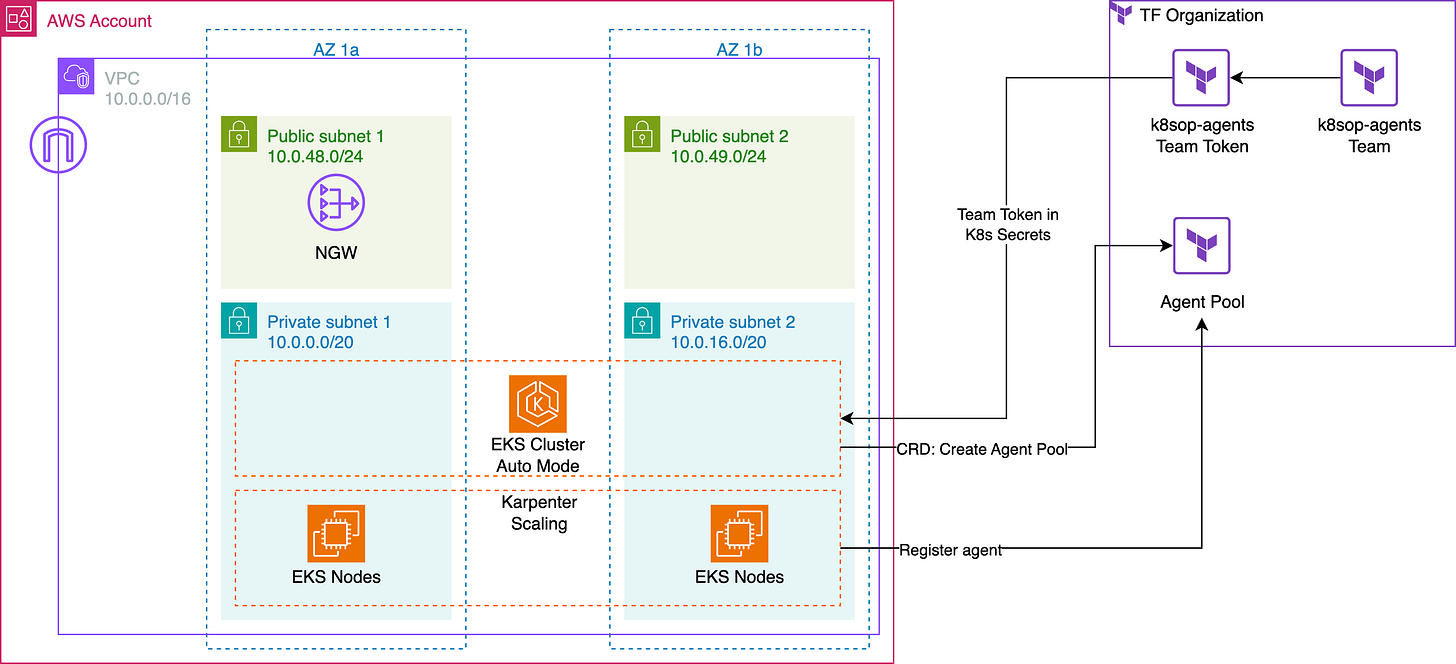

To demonstrate this deployment pattern, I’ve created a GitHub repository with complete Terraform configurations that you can use to follow along. The repository contains modular configurations for each component of the solution, and the architecture diagram above shows the deployed solution. Note that this code is for demonstration purposes only and should not be used in production environments.

Create an EKS Auto Mode cluster using Terraform

The code for this section is available here. Follow the instructions in the link to configure your AWS credentials and apply the Terraform configuration. Note that running this demo will incur cloud service costs. Ensure you understand the pricing implications before proceeding.

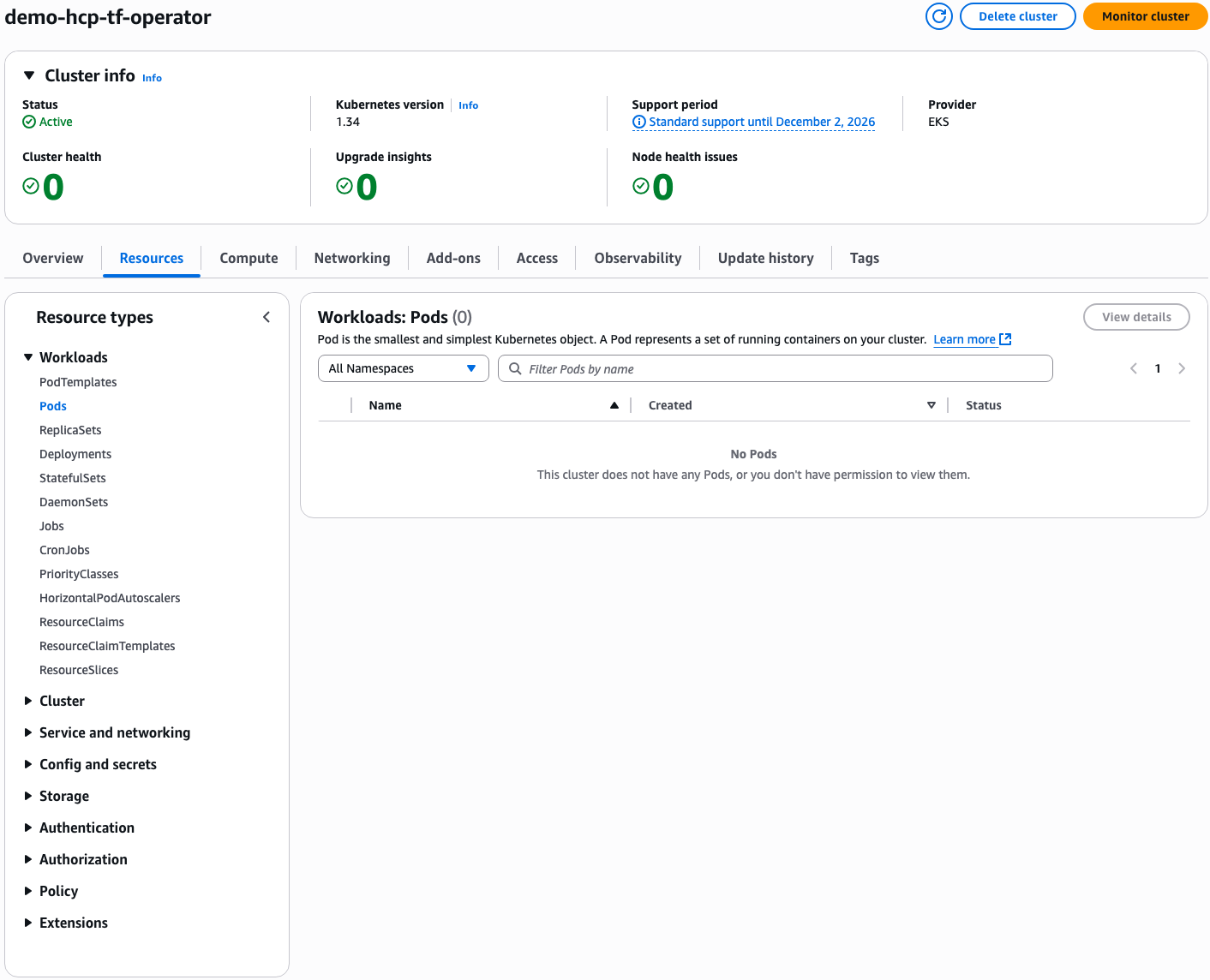

When the EKS cluster is first created, no pods are running.

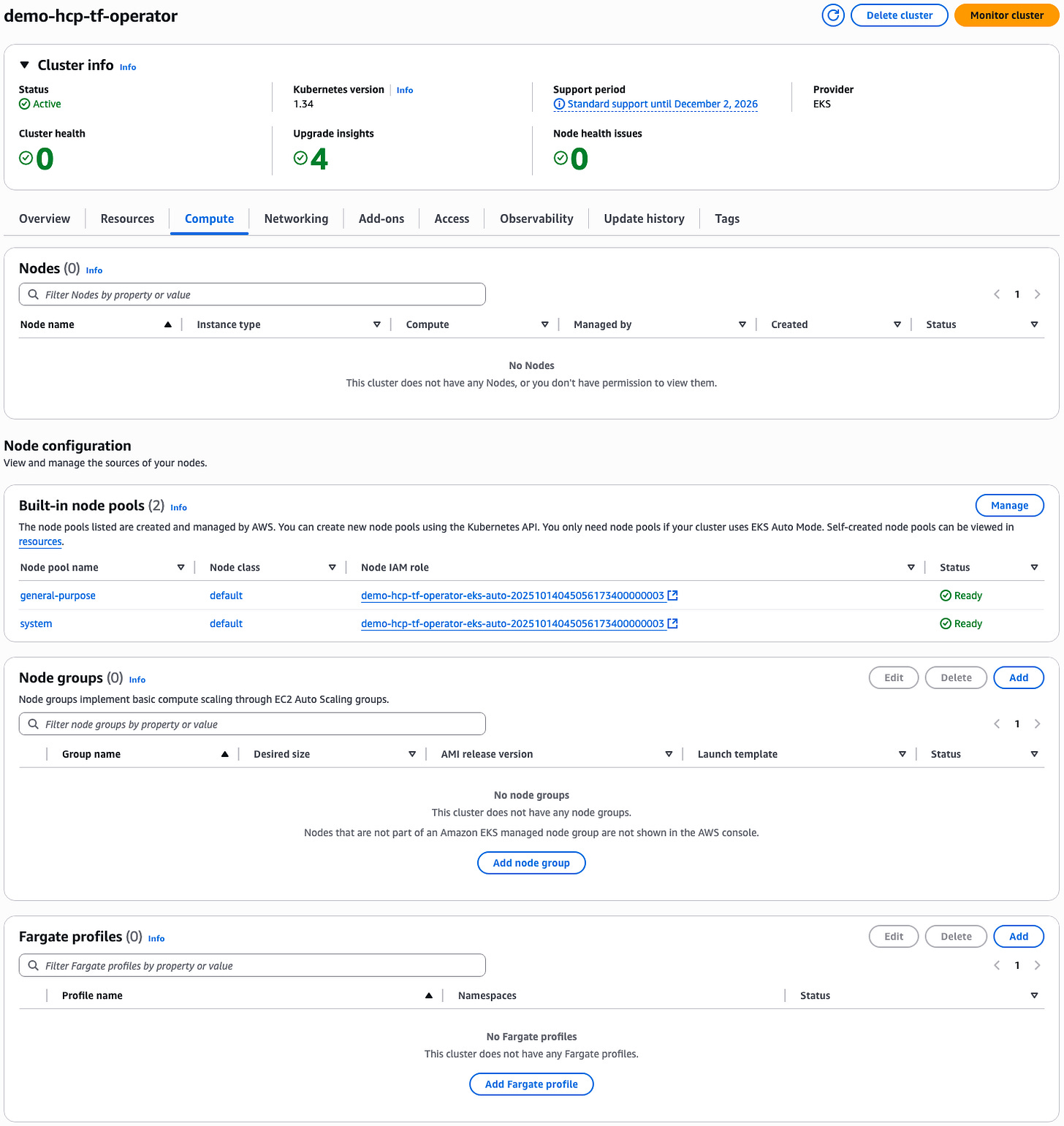

Since there are no deployments or unscheduled pods, no compute nodes are provisioned.

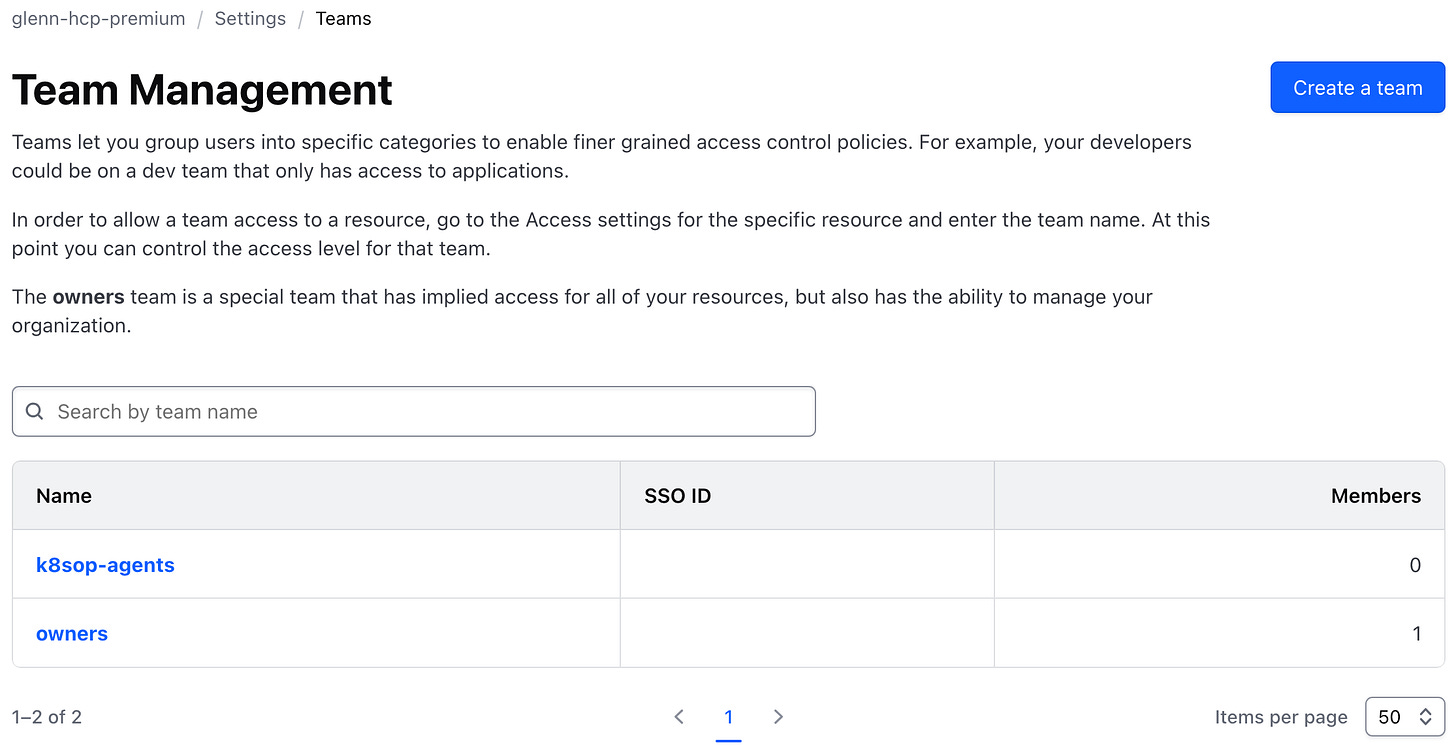

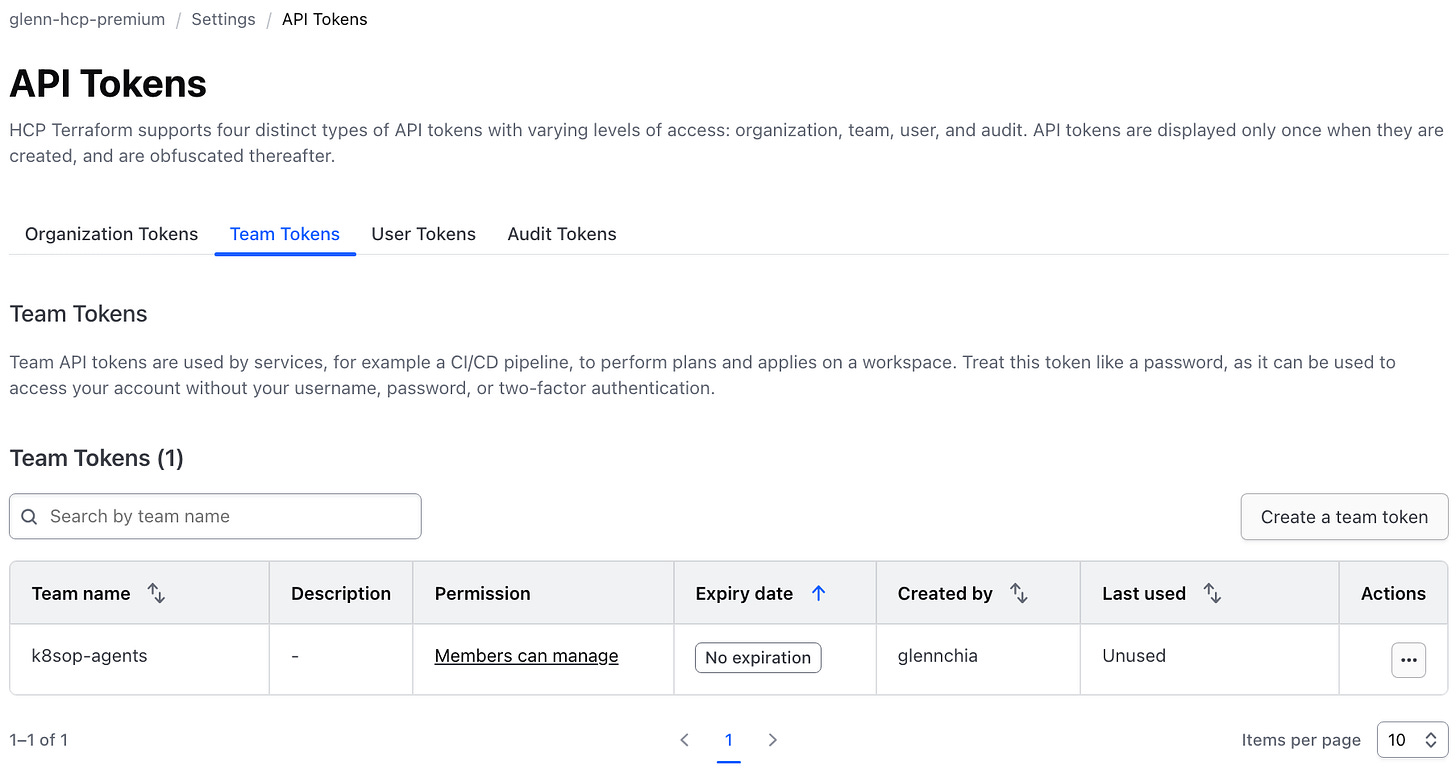

Create HCP Terraform Team and Token

This section follows the guidance in the HashiCorp Terraform Operator v2 Agent Pool tutorial. The code for this section is available here. Follow the instructions in the linked code to configure your HCP credentials and apply the Terraform configuration.

This creates a Terraform team named k8sop-agents with permissions to manage agent pools and read workspaces. The operator needs both permissions to act on the agent pool custom resource: it must be able to create the agent pool and detect pending runs in Terraform workspaces to scale the agents within the pool.
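For readers who prefer the raw API over the linked Terraform configuration, the sketch below builds a JSON:API request body for the HCP Terraform Teams API that requests only the two organization-level permissions described above. It assumes the `organization-access` keys are named `manage-agent-pools` and `read-workspaces`. Confirm them against the Teams API reference before use. The `team_payload` helper is hypothetical.

```python
import json


def team_payload(team_name: str) -> dict:
    """Request body for creating a team (JSON:API format).

    The organization-access attribute names are assumptions to verify
    against the HCP Terraform Teams API reference.
    """
    return {
        "data": {
            "type": "teams",
            "attributes": {
                "name": team_name,
                "organization-access": {
                    "manage-agent-pools": True,  # create and manage the agent pool
                    "read-workspaces": True,     # see pending runs in workspaces
                },
            },
        }
    }


if __name__ == "__main__":
    # Body for POST /api/v2/organizations/<organization>/teams
    print(json.dumps(team_payload("k8sop-agents"), indent=2))
```

The printed body would be sent with `Content-Type: application/vnd.api+json` and an `Authorization: Bearer` header carrying a token that is allowed to create teams in the organization.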

A team token is also created.

Create the Terraform Operator

The code for this section is available here. Follow the instructions in the link to install the operator in the EKS Auto Mode cluster.

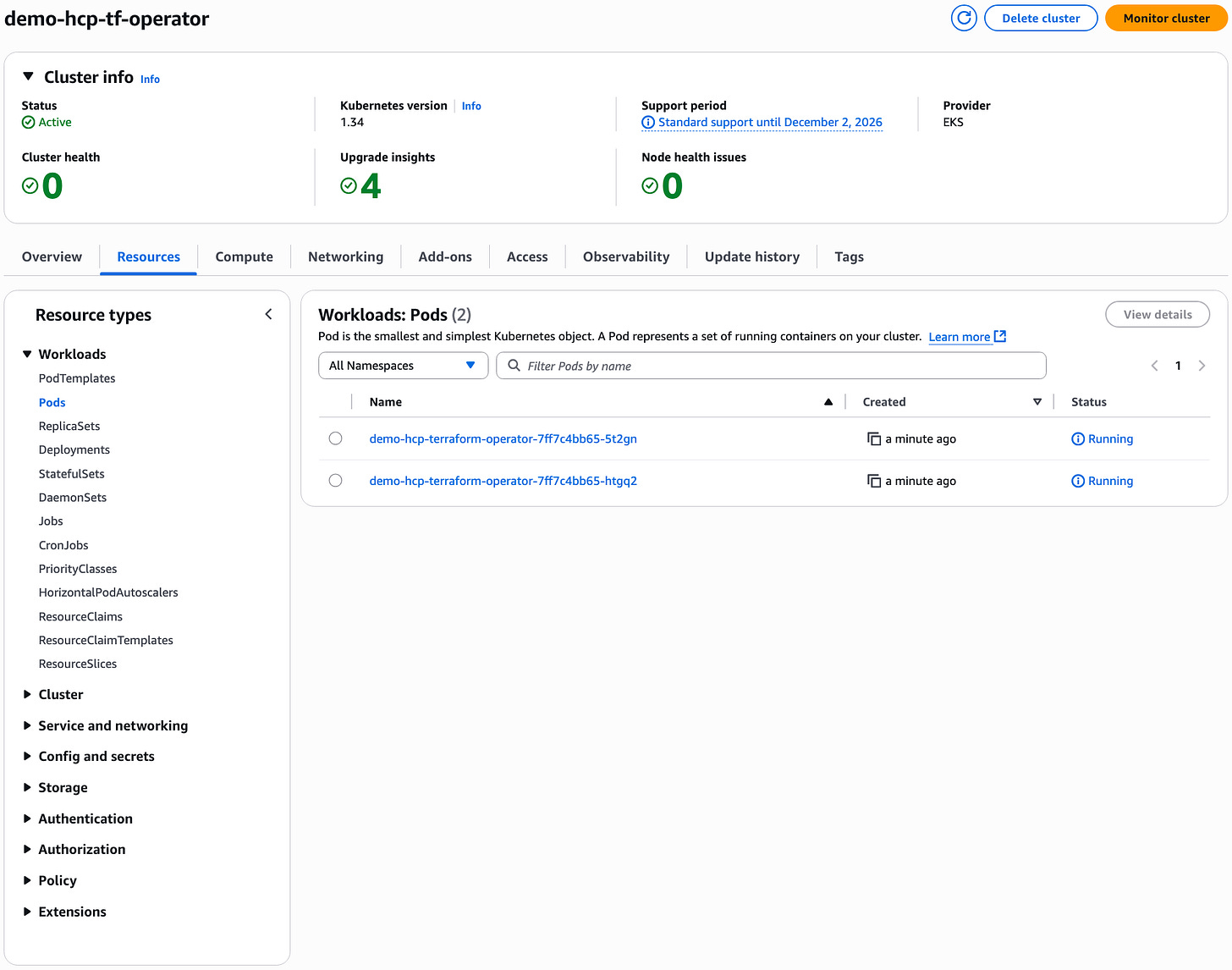

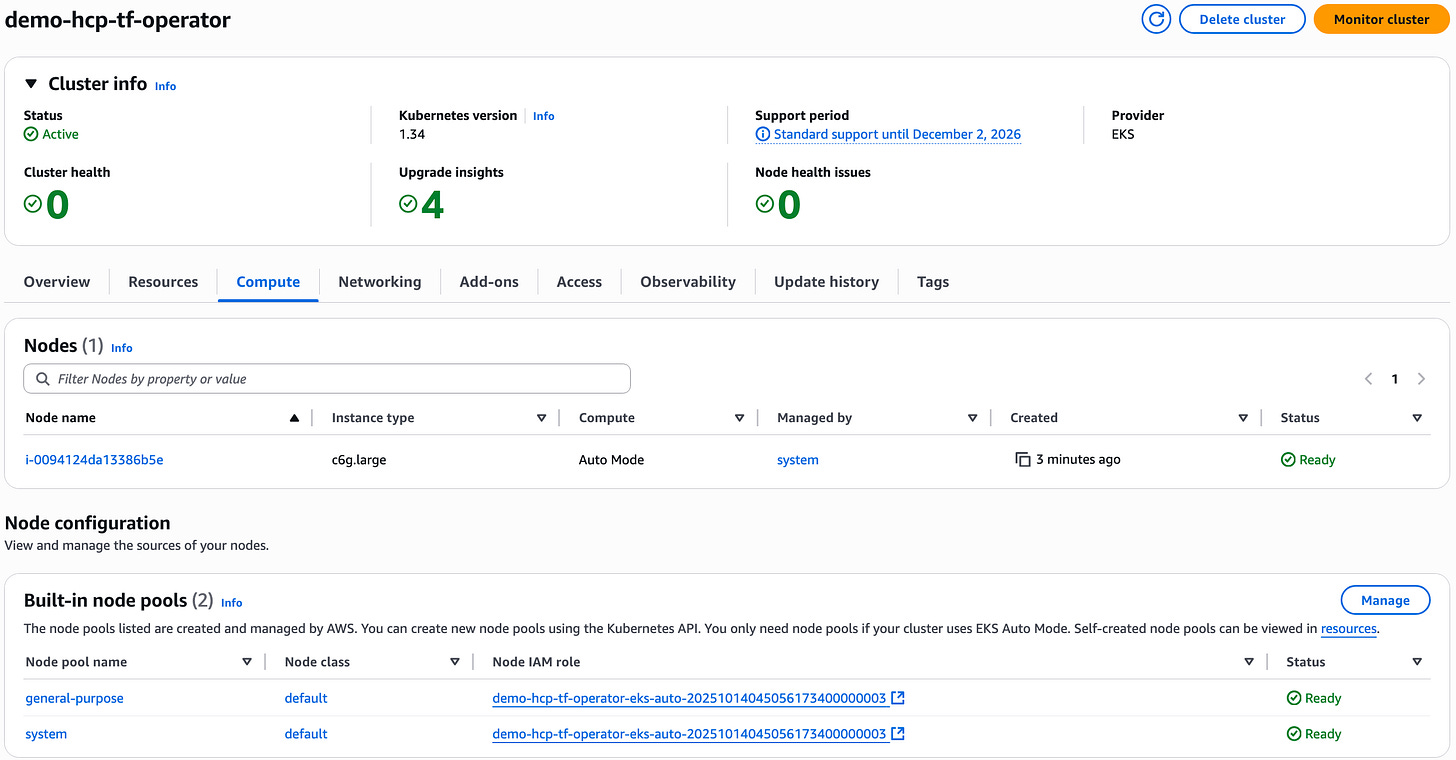

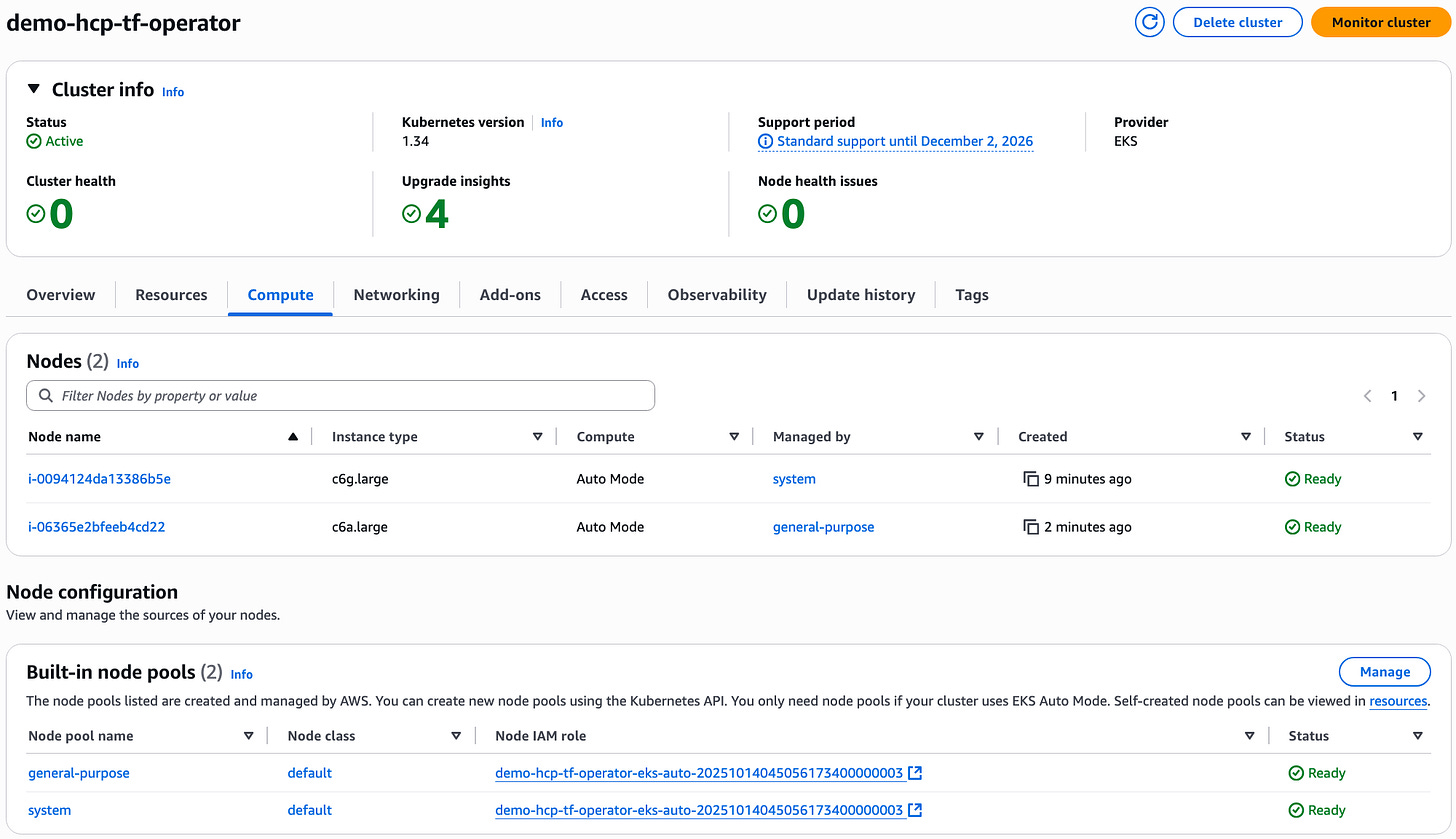

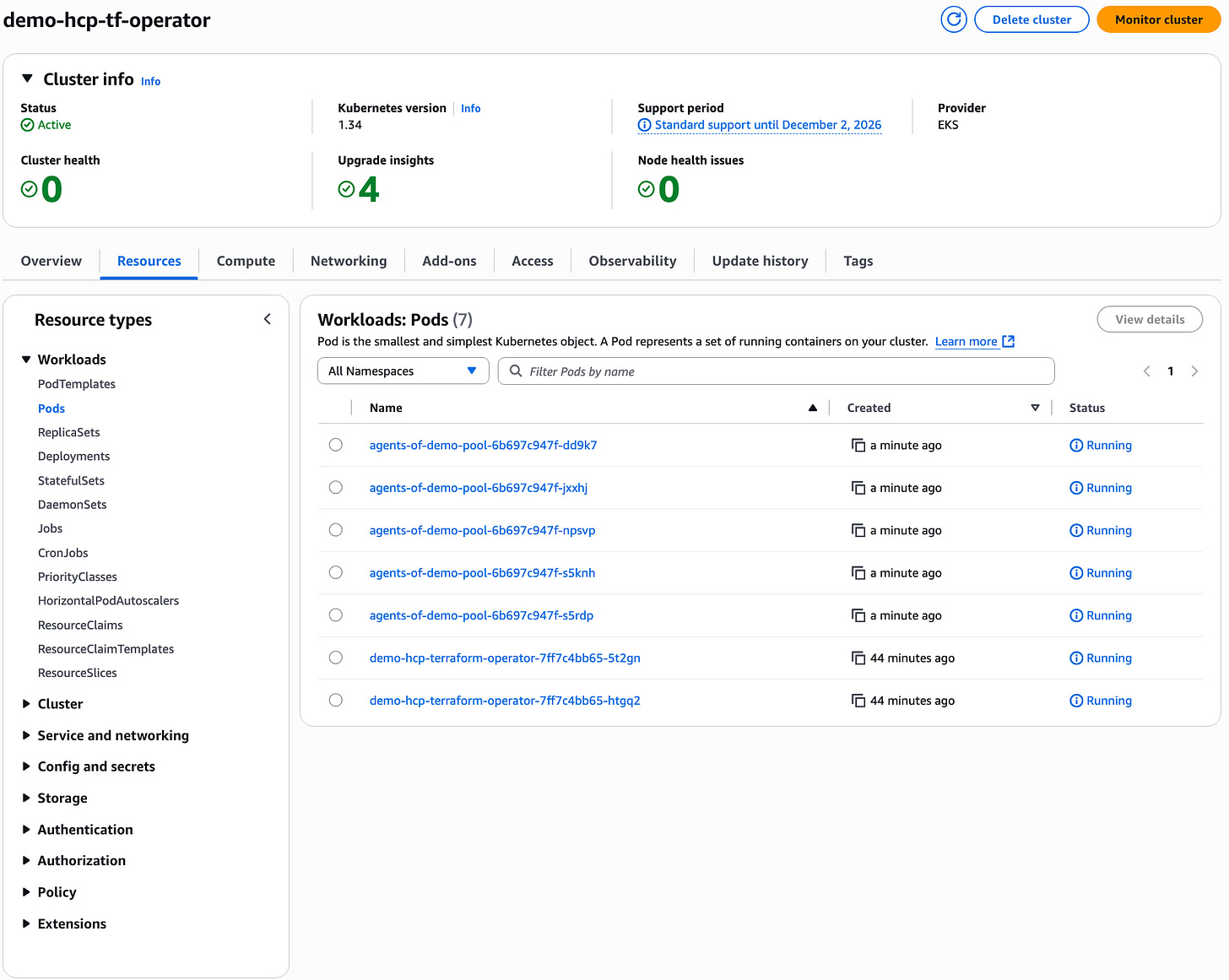

The EKS cluster now has 2 Terraform Operator pods running.

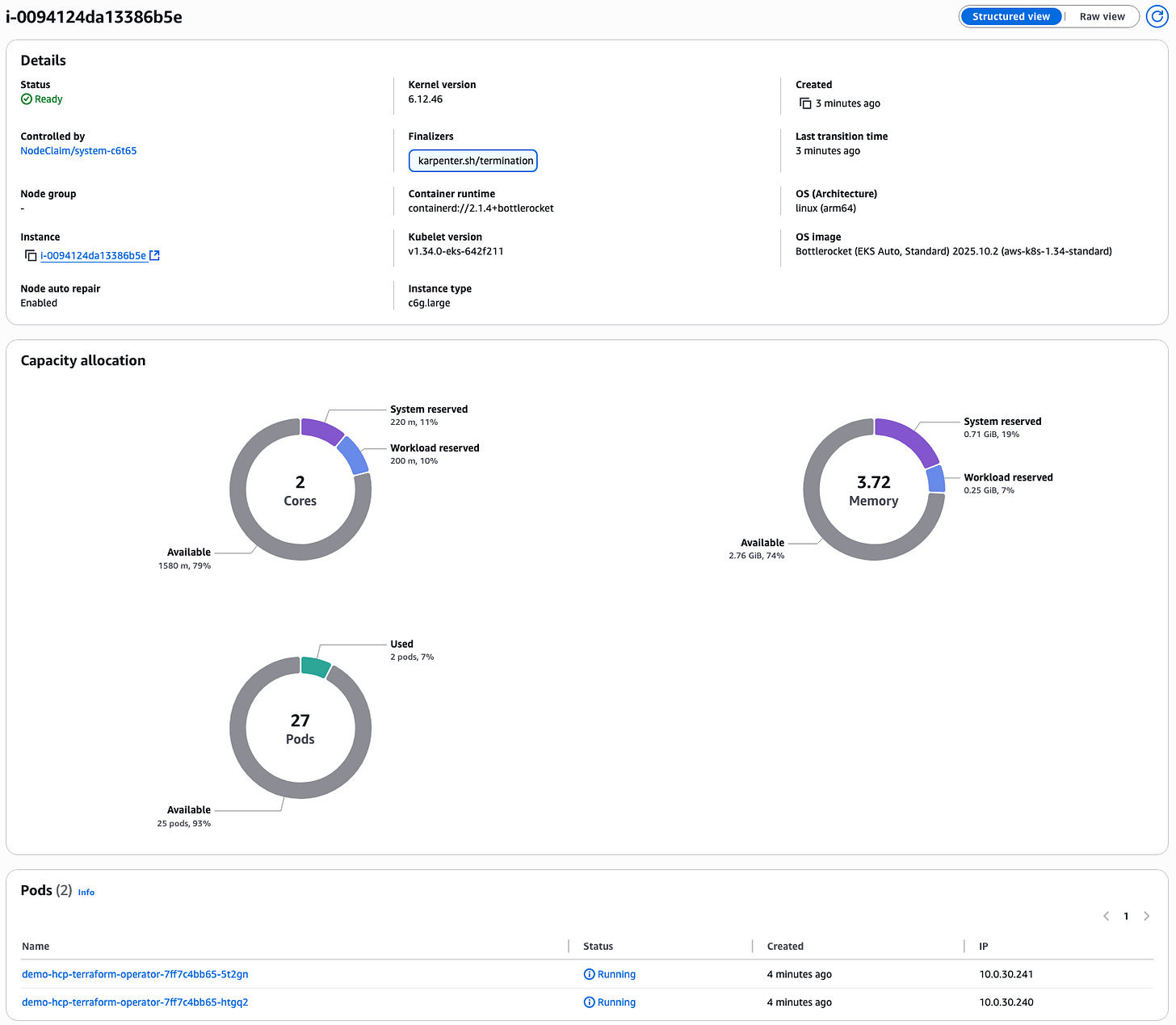

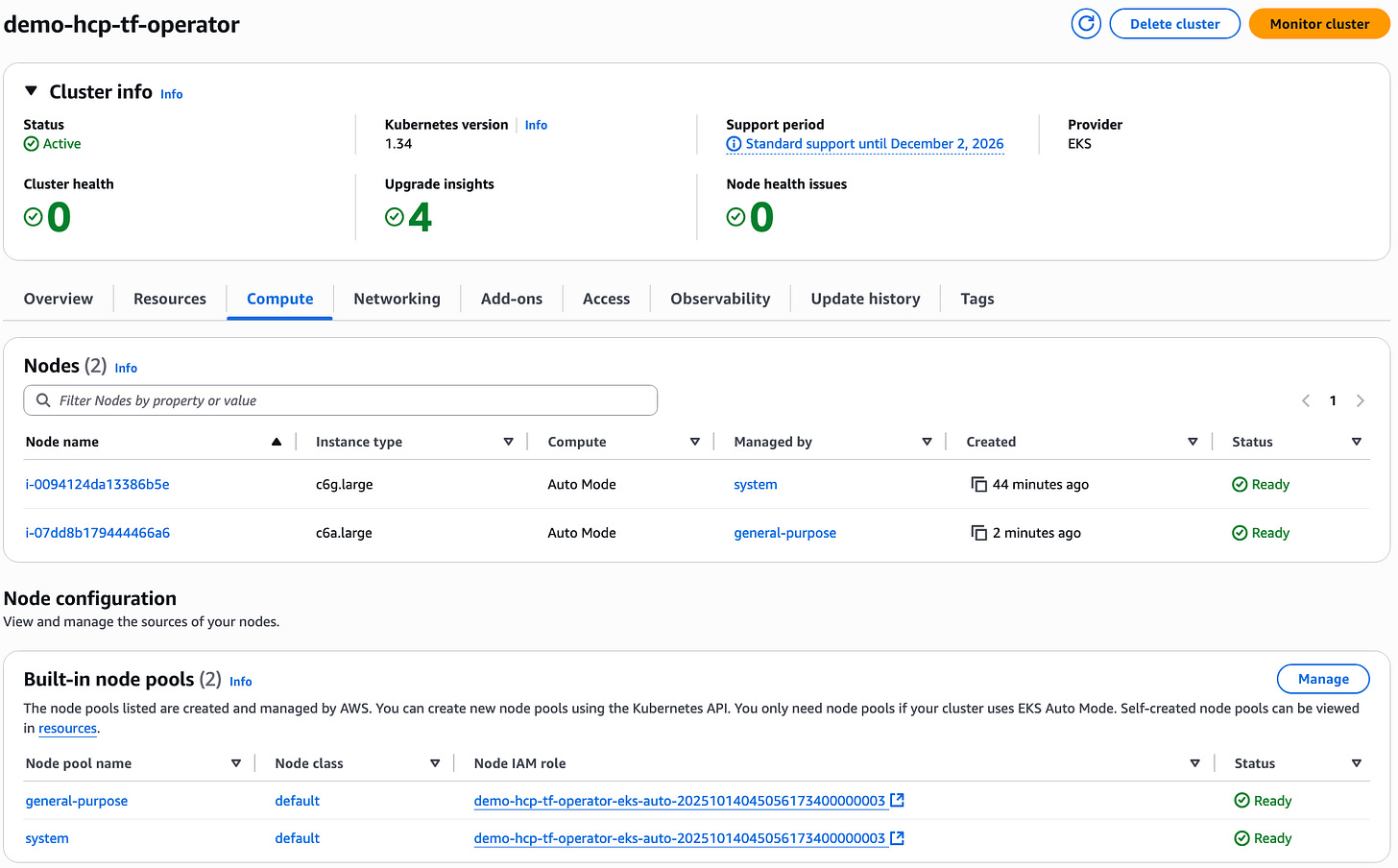

EKS Auto Mode automatically provisions a node to host the pods. This node is managed by the built-in system node pool. Refer to the Amazon EKS documentation on running critical add-ons on dedicated instances for more information about scheduling pods onto the system node pool.

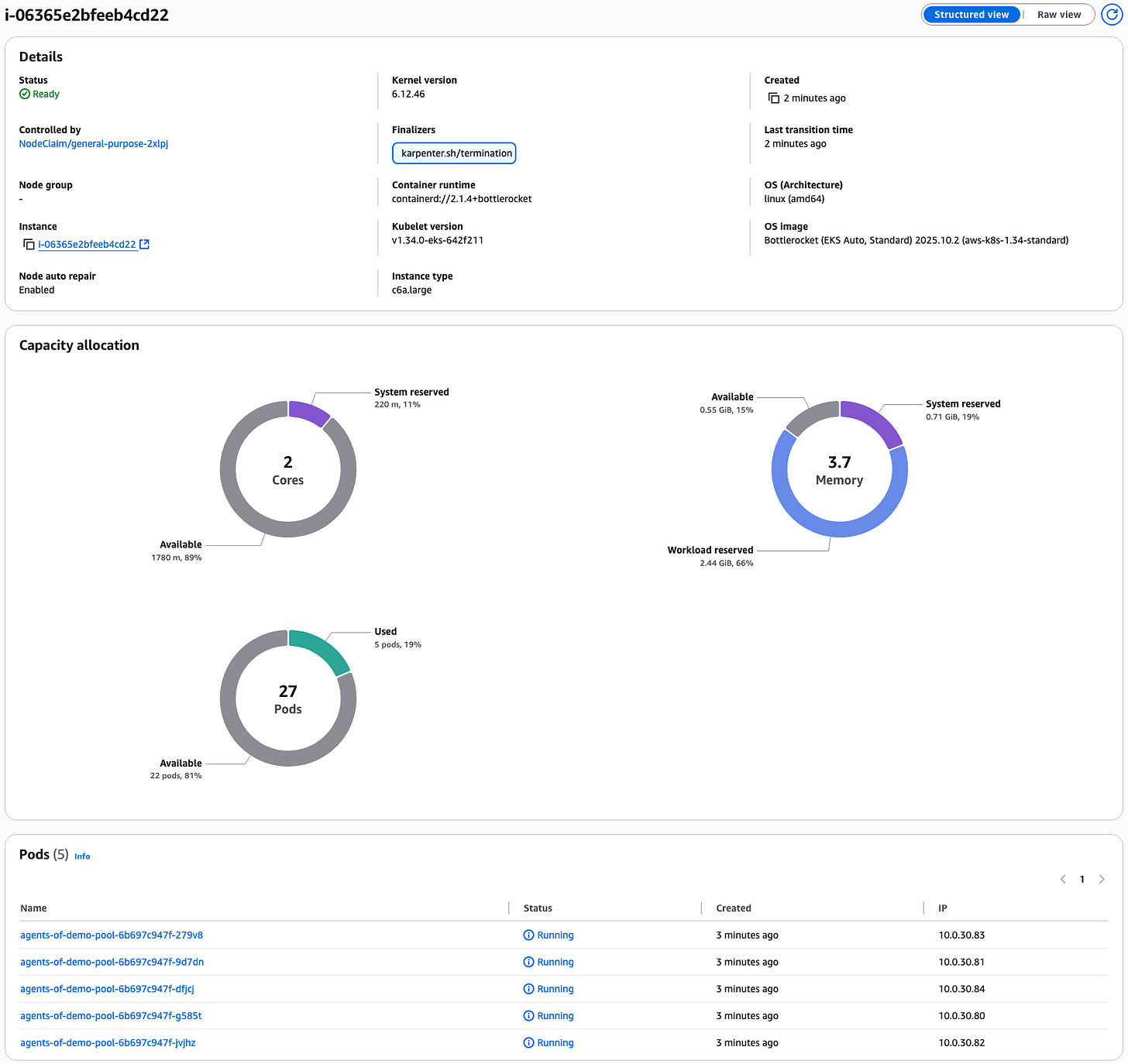

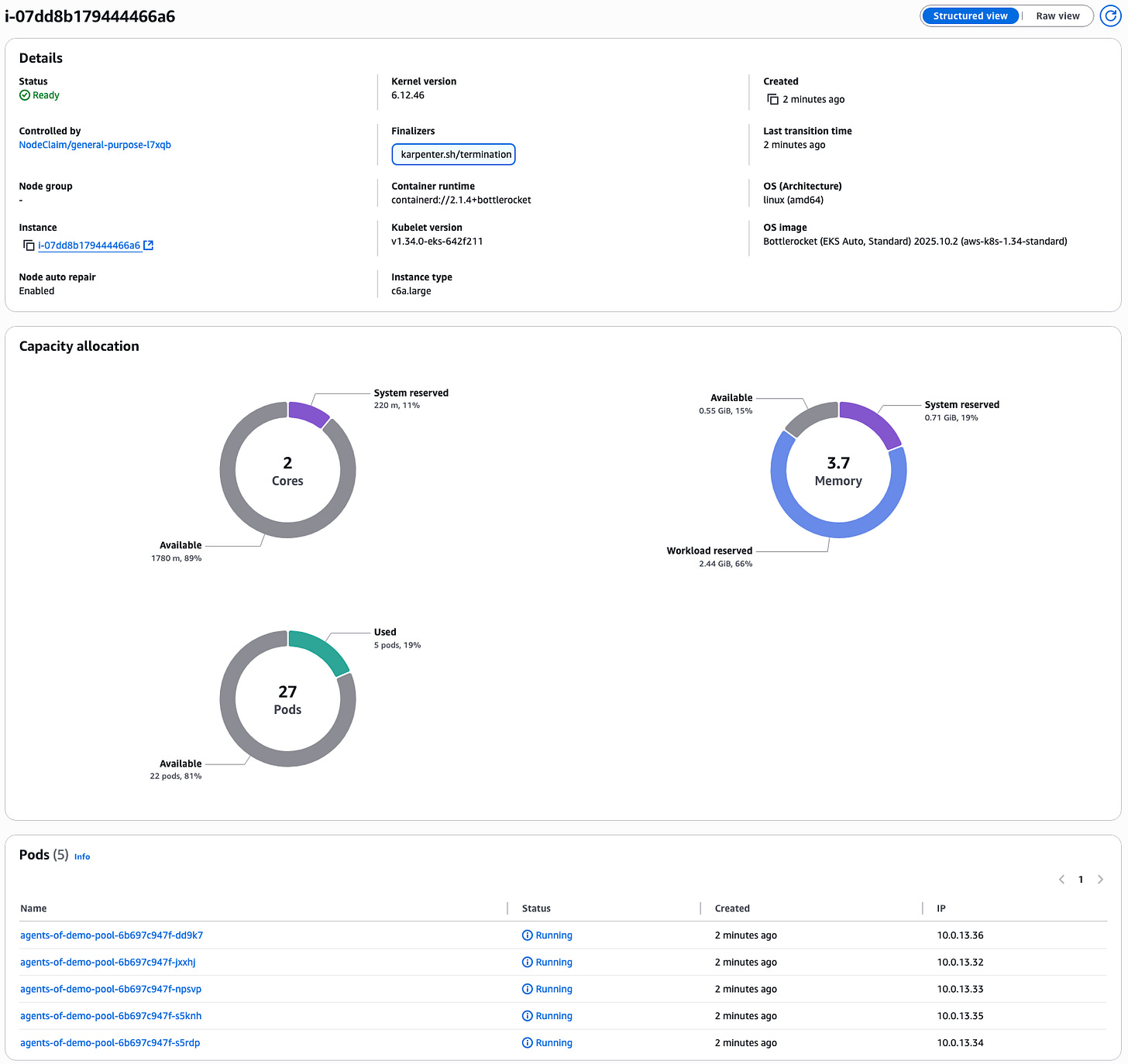

Viewing the node capacity allocation shows the 2 pods and their memory reservation.

Create the Terraform AgentPool custom resource

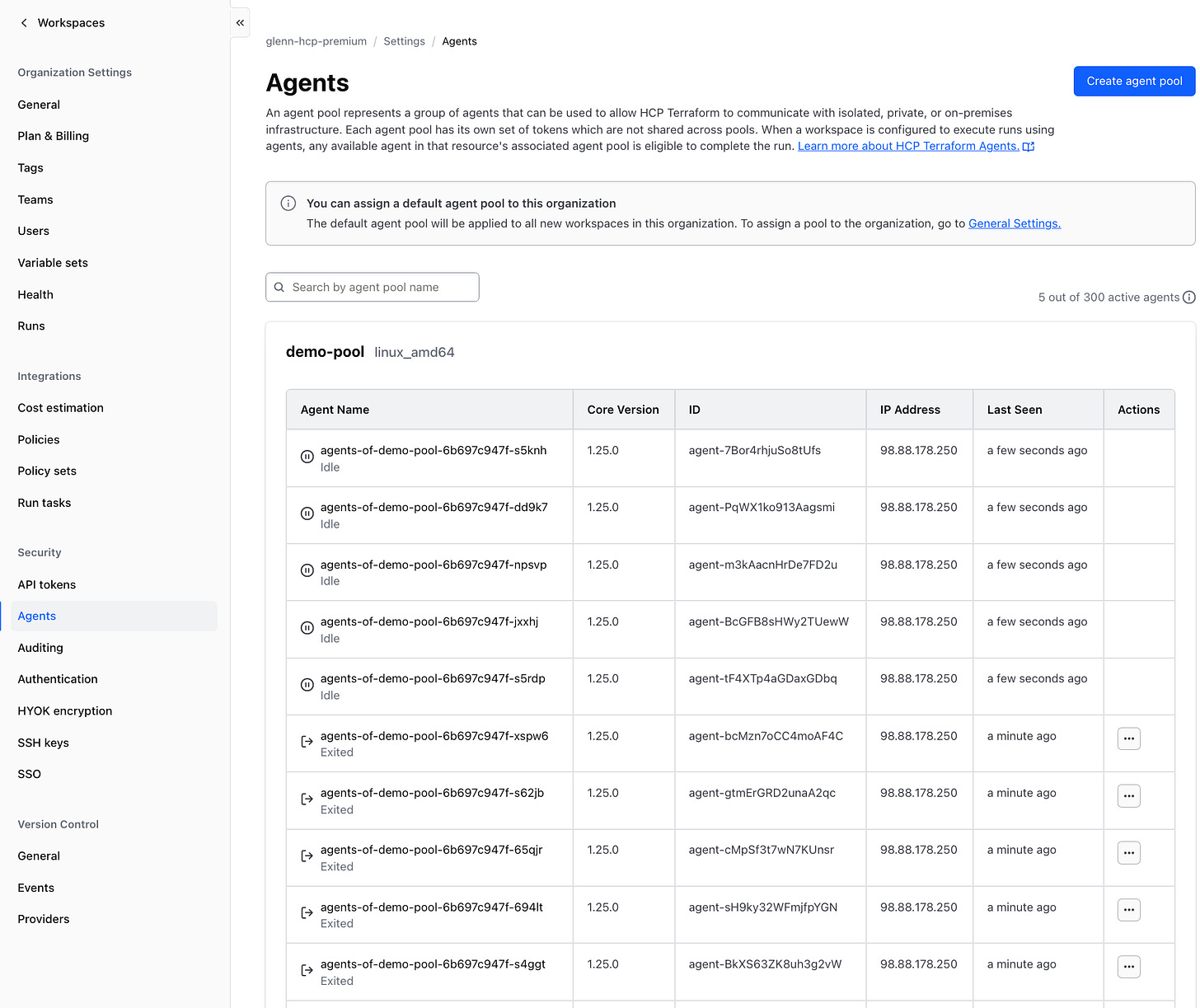

The code for this section is available here. Follow the instructions to create the AgentPool custom resource. For this demo, the Terraform agent pool is configured with minReplicas set to 5 and maxReplicas set to 50. These settings fit within HCP Terraform Premium, which supports up to 300 self-hosted agents. If you are using HCP Terraform Essentials or Standard, adjust these values to stay within your plan’s agent limits.
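The sketch below assembles an AgentPool custom resource with the demo's autoscaling bounds and rejects values that exceed a plan's agent limit. The field names reflect our understanding of the operator's `app.terraform.io/v1alpha2` AgentPool API and should be checked against the operator's API reference. The Secret name and key, the agent token name, and the `agent_pool_manifest` helper are hypothetical. Fields the demo repository may also set, such as agent deployment or workspace targeting options, are omitted. Only the Premium limit of 300 comes from this post, so pass your own plan's limit for Essentials or Standard.

```python
import json

# From the post: HCP Terraform Premium supports up to 300 self-hosted agents.
PREMIUM_AGENT_LIMIT = 300


def agent_pool_manifest(
    organization: str,
    pool_name: str,
    min_replicas: int,
    max_replicas: int,
    plan_agent_limit: int = PREMIUM_AGENT_LIMIT,
) -> dict:
    """Build an AgentPool custom resource as a dict after sanity-checking replicas.

    Field names are assumptions based on the operator's v1alpha2 AgentPool API;
    the Secret name/key and agent token name are hypothetical.
    """
    if not 0 <= min_replicas <= max_replicas:
        raise ValueError("expected 0 <= minReplicas <= maxReplicas")
    if max_replicas > plan_agent_limit:
        raise ValueError(f"maxReplicas {max_replicas} exceeds the plan limit of {plan_agent_limit}")
    return {
        "apiVersion": "app.terraform.io/v1alpha2",
        "kind": "AgentPool",
        "metadata": {"name": pool_name},
        "spec": {
            "organization": organization,
            # Kubernetes Secret holding the team token (hypothetical name and key).
            "token": {"secretKeyRef": {"name": "tfc-operator", "key": "token"}},
            "name": pool_name,
            "agentTokens": [{"name": f"{pool_name}-token"}],
            "autoscaling": {"minReplicas": min_replicas, "maxReplicas": max_replicas},
        },
    }


if __name__ == "__main__":
    print(json.dumps(agent_pool_manifest("my-org", "demo-pool", 5, 50), indent=2))
```

Because kubectl accepts JSON manifests, the printed output could be saved to a file and applied with `kubectl apply -f agentpool.json`.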

Beyond autoscaling, the operator also configures agent pods with a default 15-minute graceful termination period, which allows active Terraform runs to complete before the agents shut down. This prevents long-running infrastructure operations from being interrupted midway. If your typical Terraform runs need more or less time to finish safely, you can customize this period by setting terminationGracePeriodSeconds in your AgentPool specification.
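As a back-of-the-envelope aid for choosing terminationGracePeriodSeconds, the hypothetical helper below adds a safety margin to your longest expected run and rounds up to whole seconds. The 20% default margin is an arbitrary assumption, not an operator setting.

```python
DEFAULT_GRACE_SECONDS = 15 * 60  # operator default described in this post: 15 minutes


def grace_period_seconds(longest_run_seconds: int, margin_percent: int = 20) -> int:
    """Suggest terminationGracePeriodSeconds from the longest expected run.

    Hypothetical heuristic: longest run plus a safety margin, rounded up to
    whole seconds using integer arithmetic to avoid floating-point error.
    """
    if longest_run_seconds < 0 or margin_percent < 0:
        raise ValueError("inputs must be non-negative")
    # ceil(longest * (100 + margin) / 100) via negated floor division
    return -(-longest_run_seconds * (100 + margin_percent) // 100)
```

For example, a 750-second (12.5-minute) run with a 20% margin works out to exactly 900 seconds, the 15-minute default. Longer runs suggest raising the grace period.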

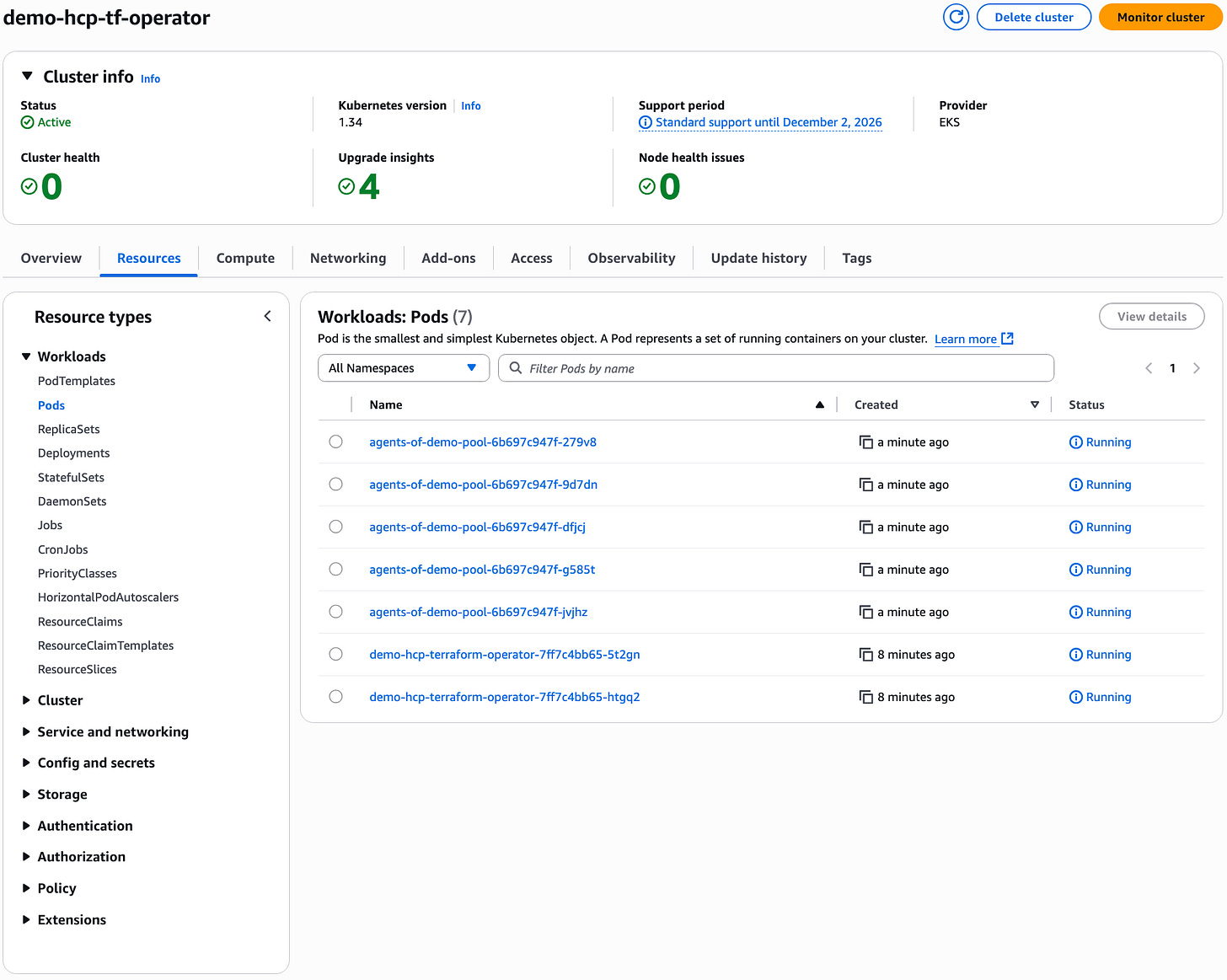

Five Terraform agent pods, prefixed with agents-of-demo-pool, are created in the EKS cluster. These run alongside the original 2 HCP Terraform Operator pods, which are prefixed with demo-hcp-terraform-operator.

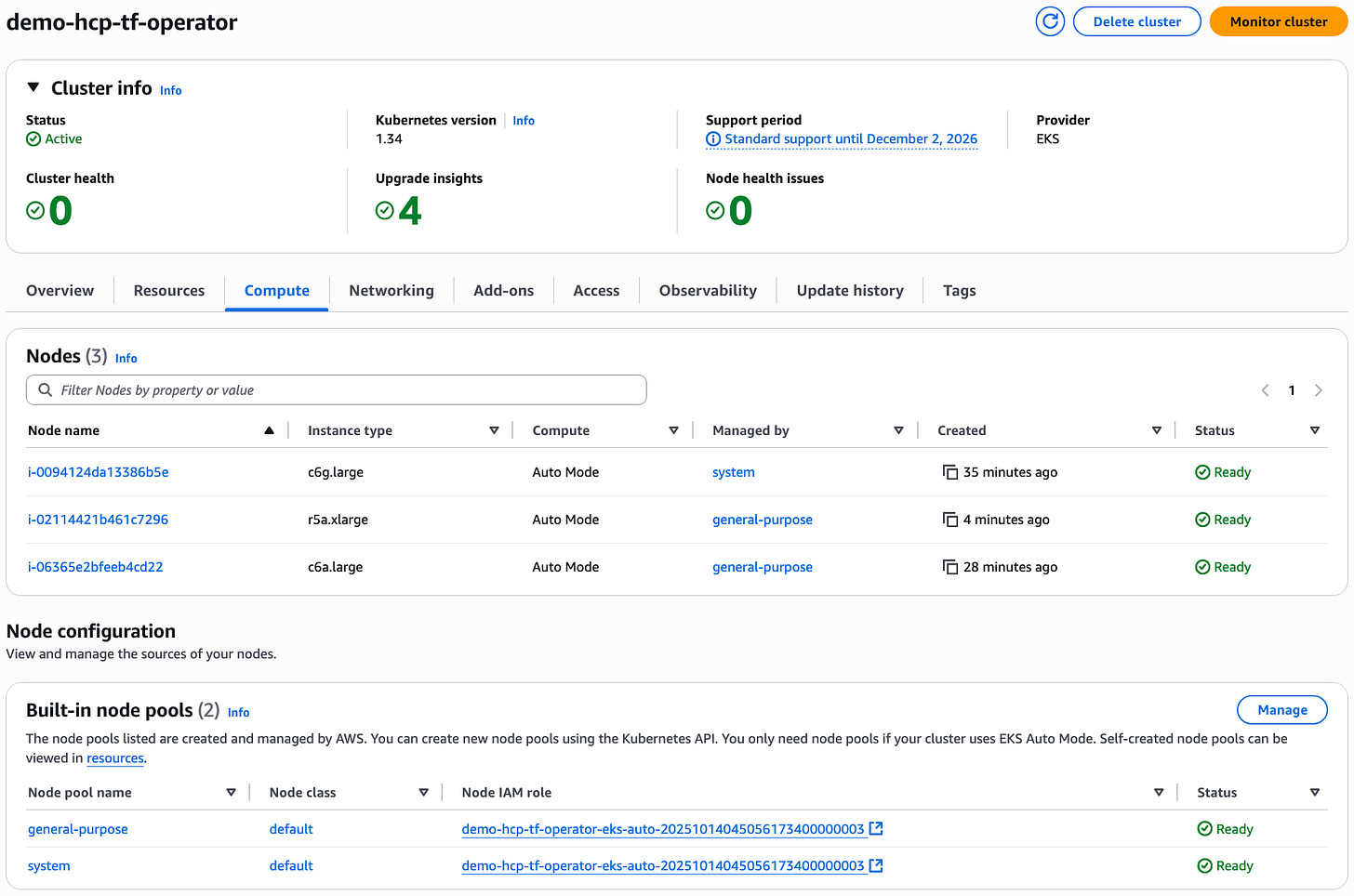

When the agent pods are created, EKS Auto Mode provisions a new node within the cluster to accommodate them, because the Terraform agent pods are scheduled onto the built-in general-purpose node pool.

Viewing the node capacity allocation shows the 5 Terraform agent pods and their memory reservation.

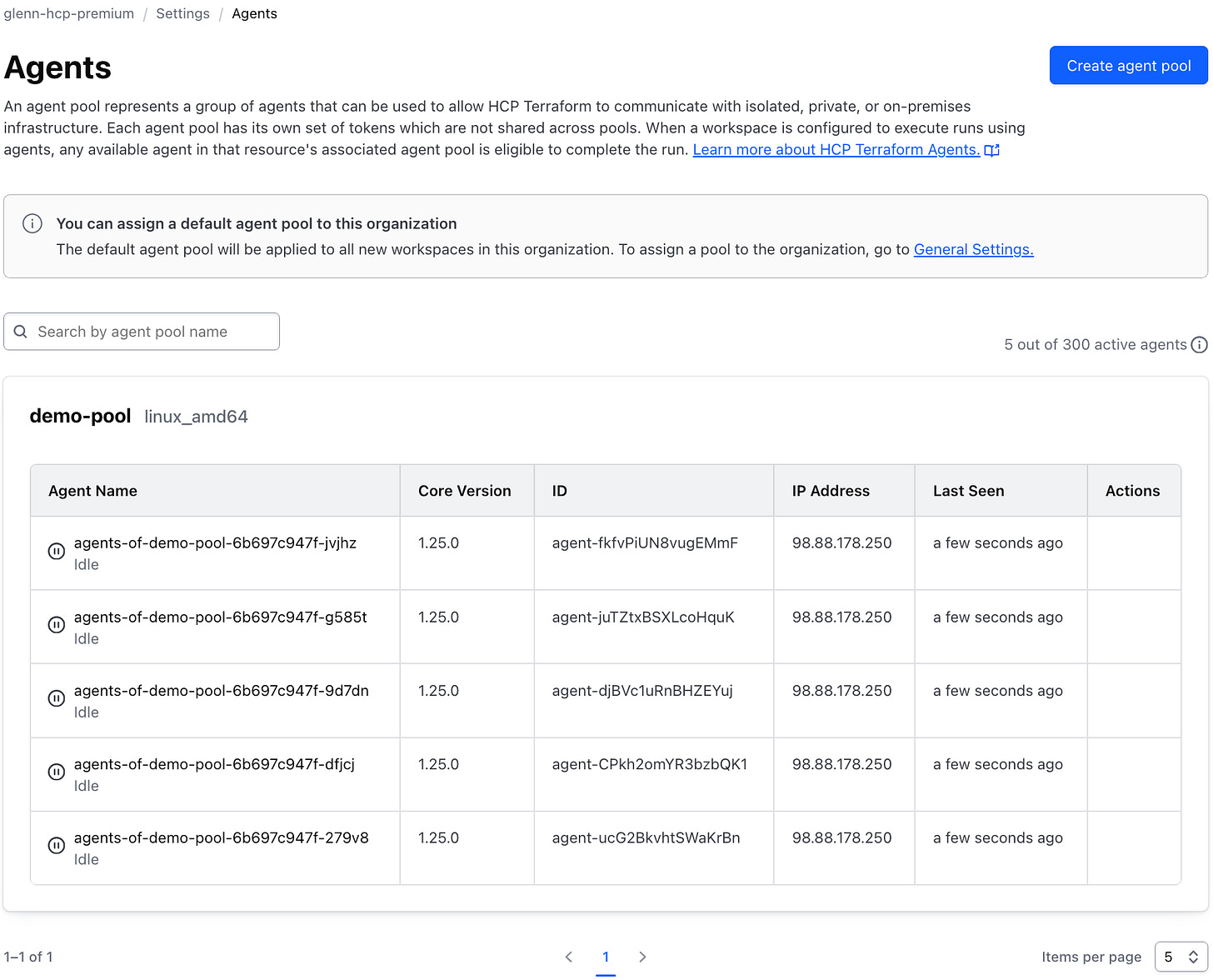

An agent pool is also created in HCP Terraform, with the 5 agents registered and in the Idle state.

Testing with workspaces

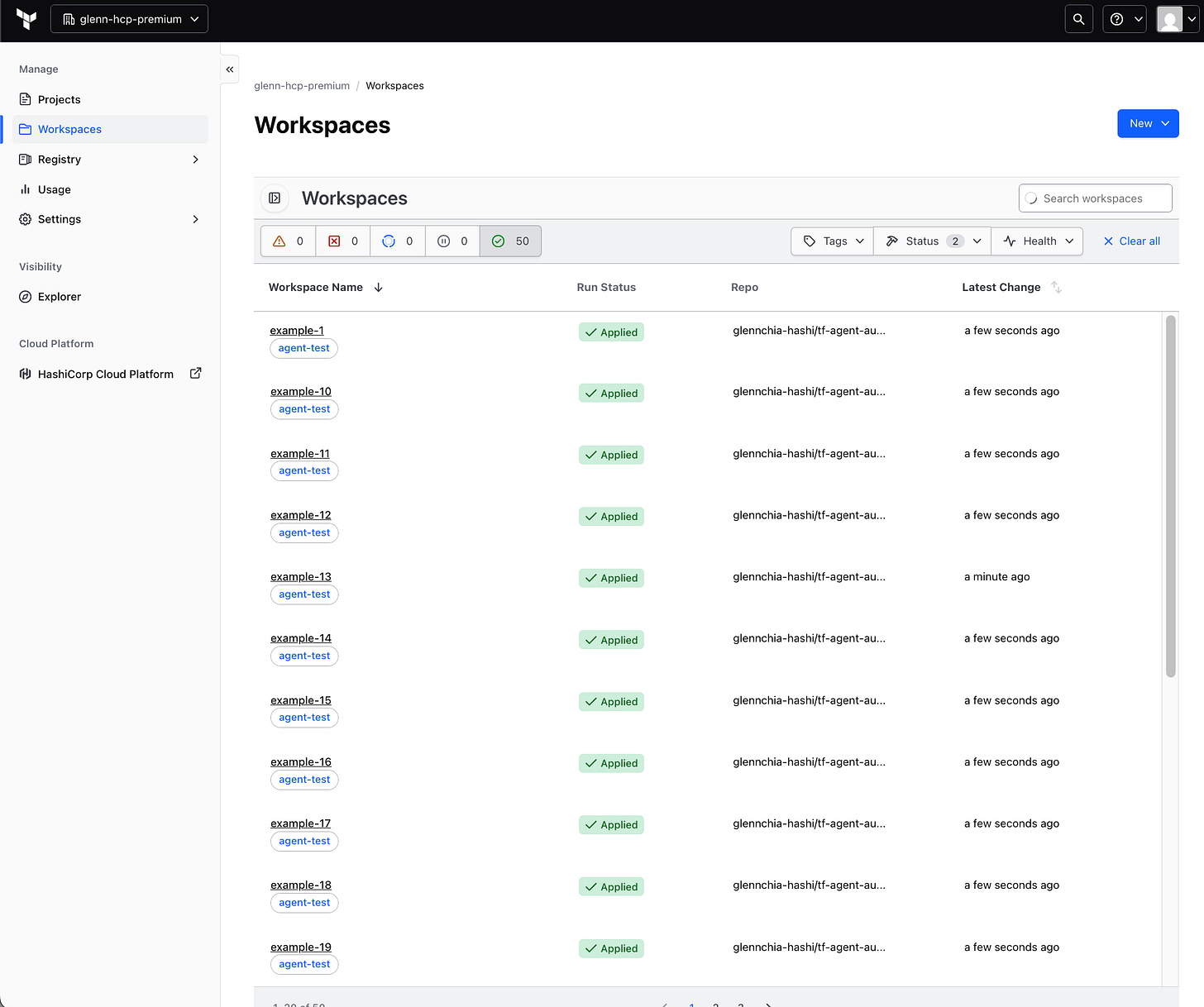

With both the operator and the agent pool deployed, the next step is to test how the HCP Terraform agents scale out and in based on workload. The test setup, available here, creates a GitHub repository containing simple Terraform configuration files and provisions 50 Terraform workspaces. Each workspace is connected to this repository through VCS integration, so plan and apply operations run automatically when code changes are pushed. All workspaces are configured to use agent execution mode and are linked to the agent pool created earlier through the AgentPool custom resource.
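The linked test setup uses Terraform, but the per-workspace settings it needs can be illustrated with request bodies for the HCP Terraform Workspaces API. This sketch assumes the Workspaces API attributes `execution-mode`, `agent-pool-id`, and `vcs-repo`. The `workspace_payloads` helper, the `demo-ws` prefix, the repository identifier, and the `apool-`/`ot-` IDs are hypothetical placeholders.

```python
def workspace_payloads(
    count: int,
    agent_pool_id: str,
    repo_identifier: str,
    oauth_token_id: str,
    prefix: str = "demo-ws",
) -> list[dict]:
    """Request bodies for creating `count` VCS-connected workspaces in agent mode."""
    return [
        {
            "data": {
                "type": "workspaces",
                "attributes": {
                    "name": f"{prefix}-{i:02d}",
                    "execution-mode": "agent",       # runs go to self-hosted agents
                    "agent-pool-id": agent_pool_id,  # ...in this specific pool
                    "vcs-repo": {
                        "identifier": repo_identifier,     # "<owner>/<repo>"
                        "oauth-token-id": oauth_token_id,  # VCS connection to use
                    },
                },
            }
        }
        for i in range(1, count + 1)
    ]


if __name__ == "__main__":
    bodies = workspace_payloads(50, "apool-EXAMPLE", "example-org/tf-agent-demo", "ot-EXAMPLE")
    print(len(bodies), bodies[0]["data"]["attributes"]["name"])
```

Each body would be sent to the organization's workspaces endpoint, so the 50 workspaces differ only in name while sharing the same repository and agent pool.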

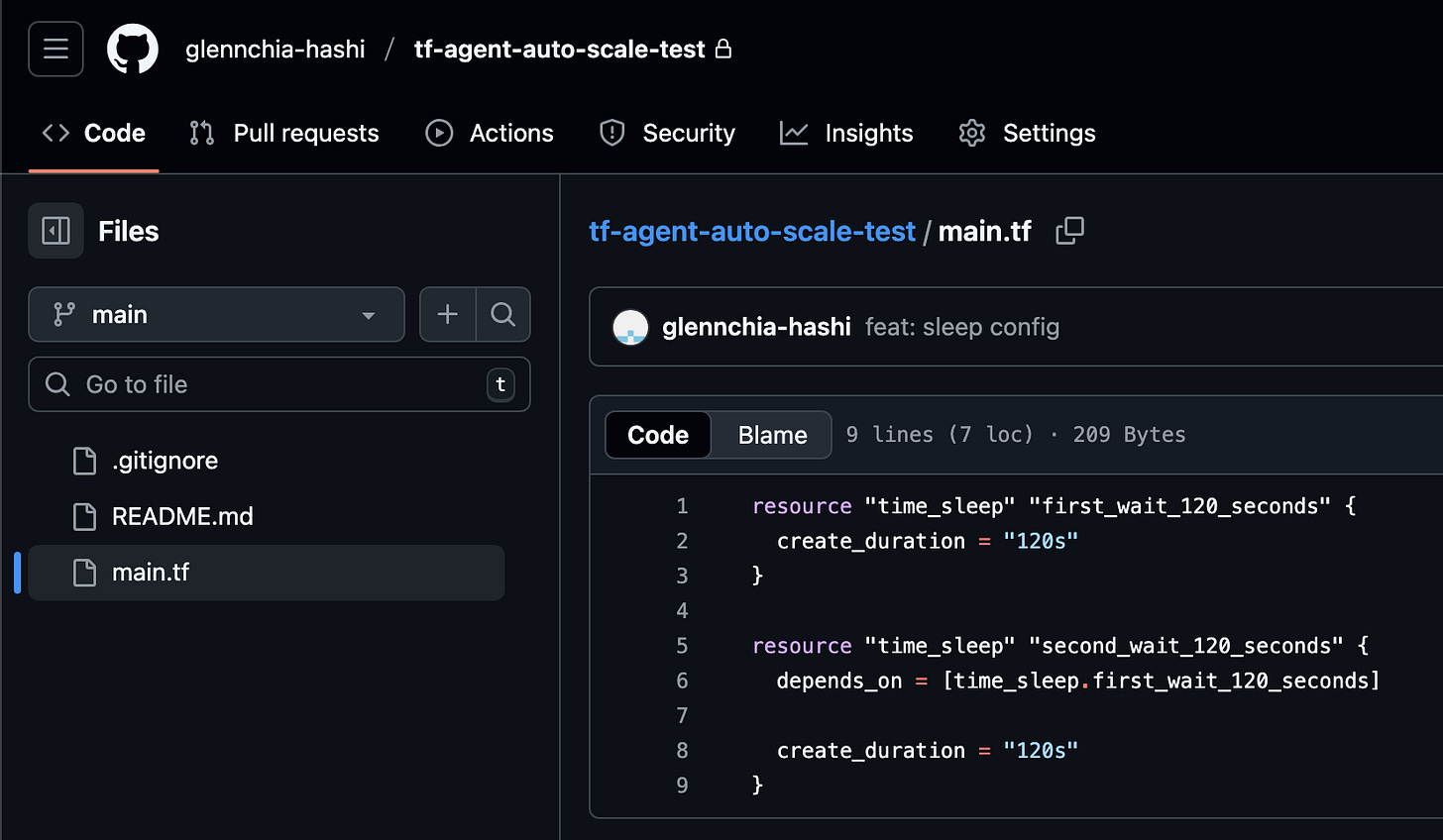

GitHub repo and workspaces

GitHub repository created with simple Terraform resources.

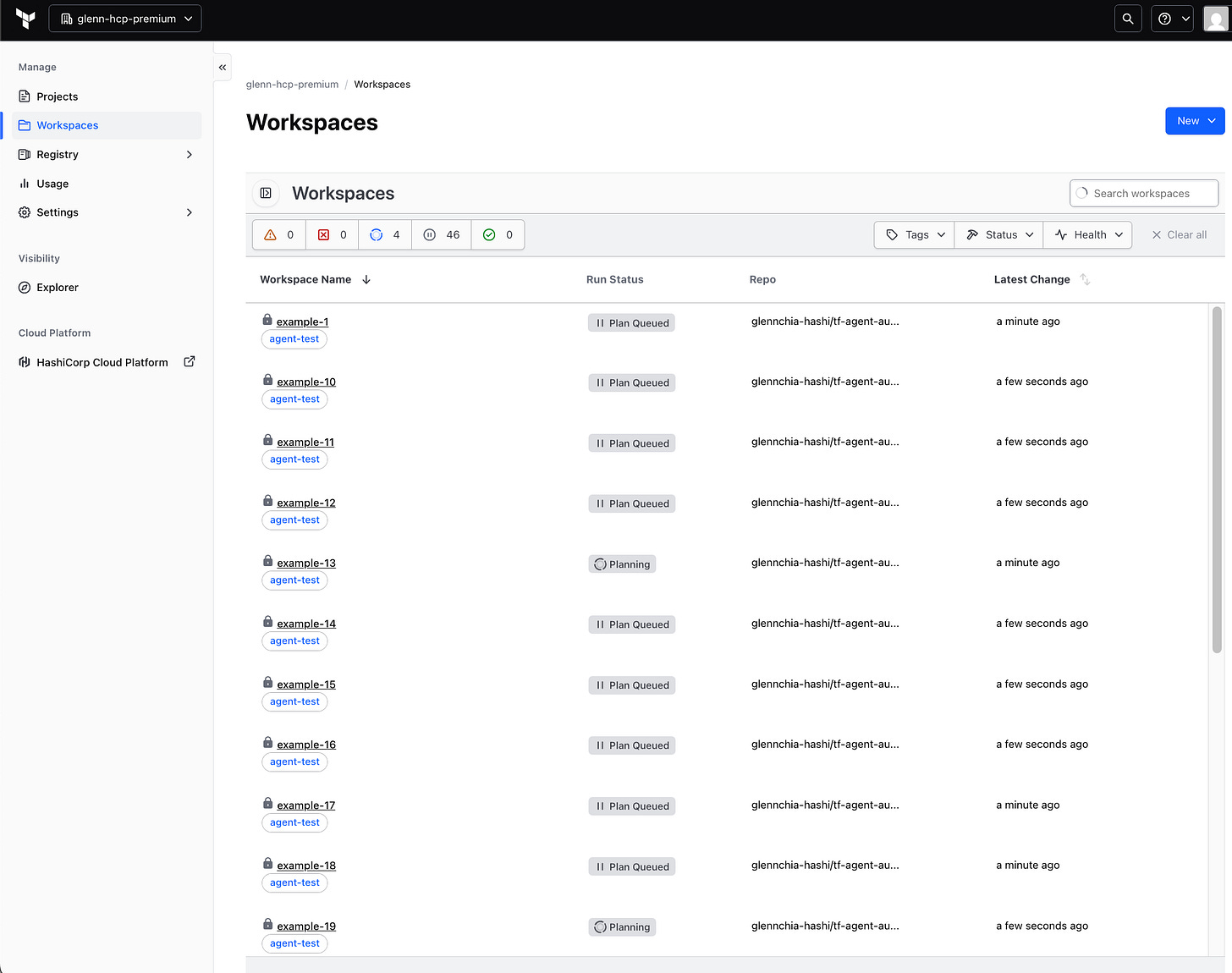

Fifty Terraform workspaces are created. The 5 pre-existing idle agents pick up some of the workspace runs, and the rest are queued. The operator detects the pending runs and begins to scale out the Terraform agents. The logic the agent pool controller uses to scale is available in the hcp-terraform-operator GitHub repo.
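A deliberately simplified model of the scaling decision: count active plus pending runs, then clamp that demand to the pool's minReplicas and maxReplicas. This is an illustration only. The operator's real controller logic in the hcp-terraform-operator repo may differ, for example in how it counts runs or delays scale-in. The `desired_agents` function is hypothetical.

```python
def desired_agents(active_runs: int, pending_runs: int, min_replicas: int, max_replicas: int) -> int:
    """Toy scaling rule: one agent per active or pending run, clamped to the pool bounds."""
    demand = active_runs + pending_runs
    return max(min_replicas, min(demand, max_replicas))


if __name__ == "__main__":
    # Rough shape of the demo: idle, a burst of 50 runs, then all runs finished.
    for active, pending in [(0, 0), (5, 45), (50, 0), (0, 0)]:
        print(active, pending, desired_agents(active, pending, 5, 50))
```

Given the demo's sequence (idle, a burst of 50 runs, then all runs finished), the rule yields 5, 50, 50, and 5 agents. This matches the scale-out to maxReplicas and scale-in to minReplicas described in this post.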

Terraform Operator scaling out

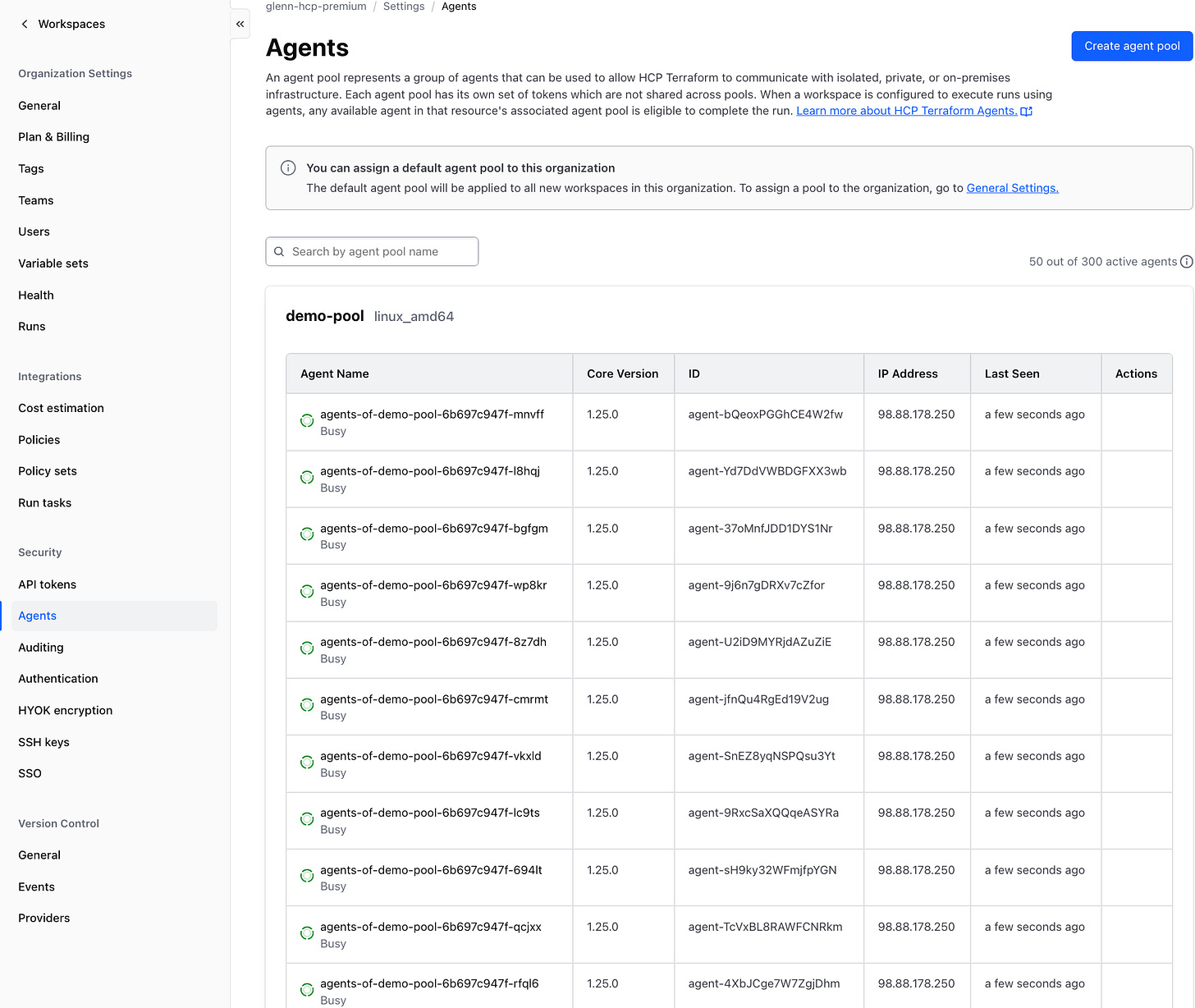

The controller detects the pending workspace runs and scales out the number of agents. At this point, the pool has 50 agents, matching the maxReplicas value specified in the AgentPool configuration.

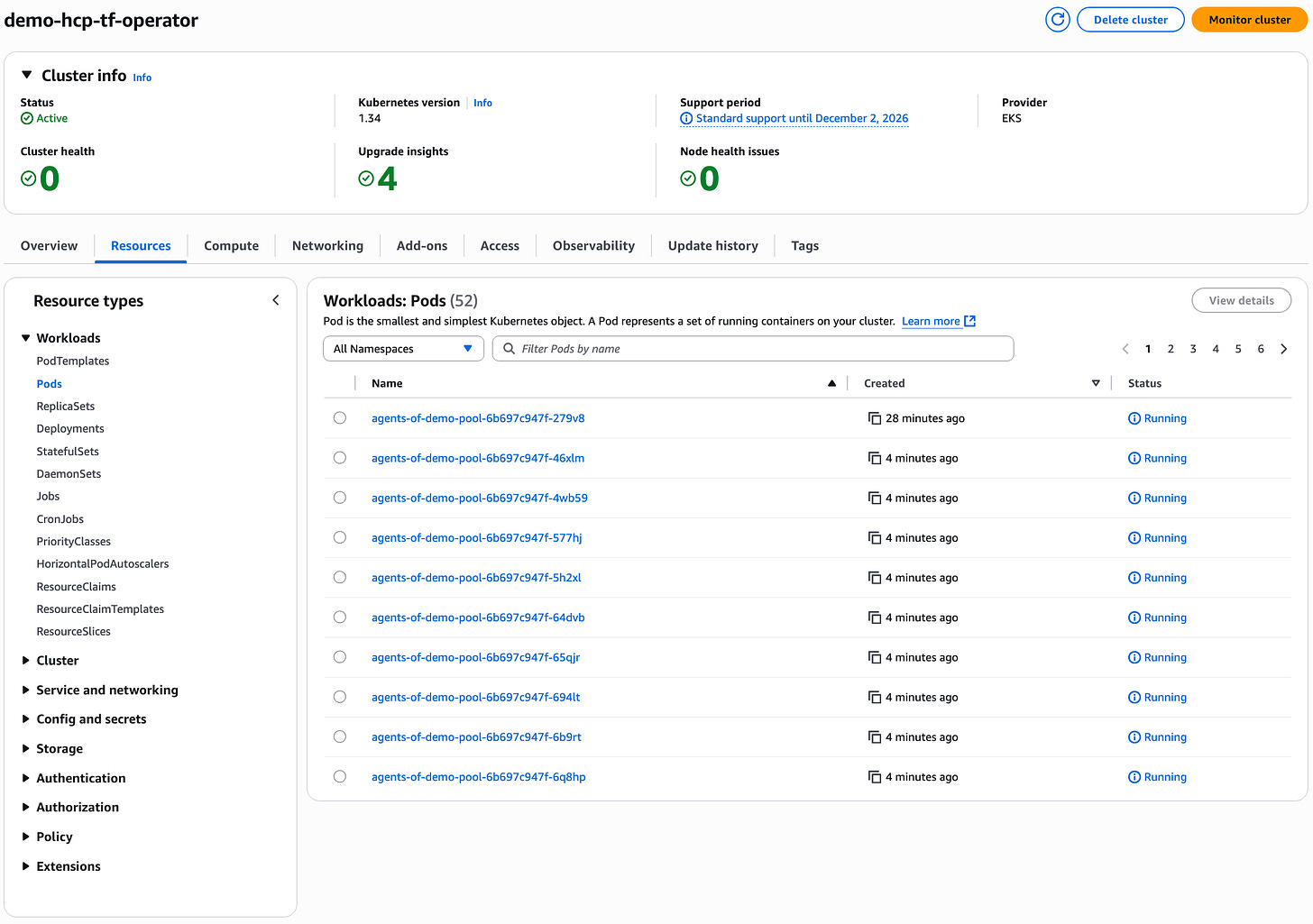

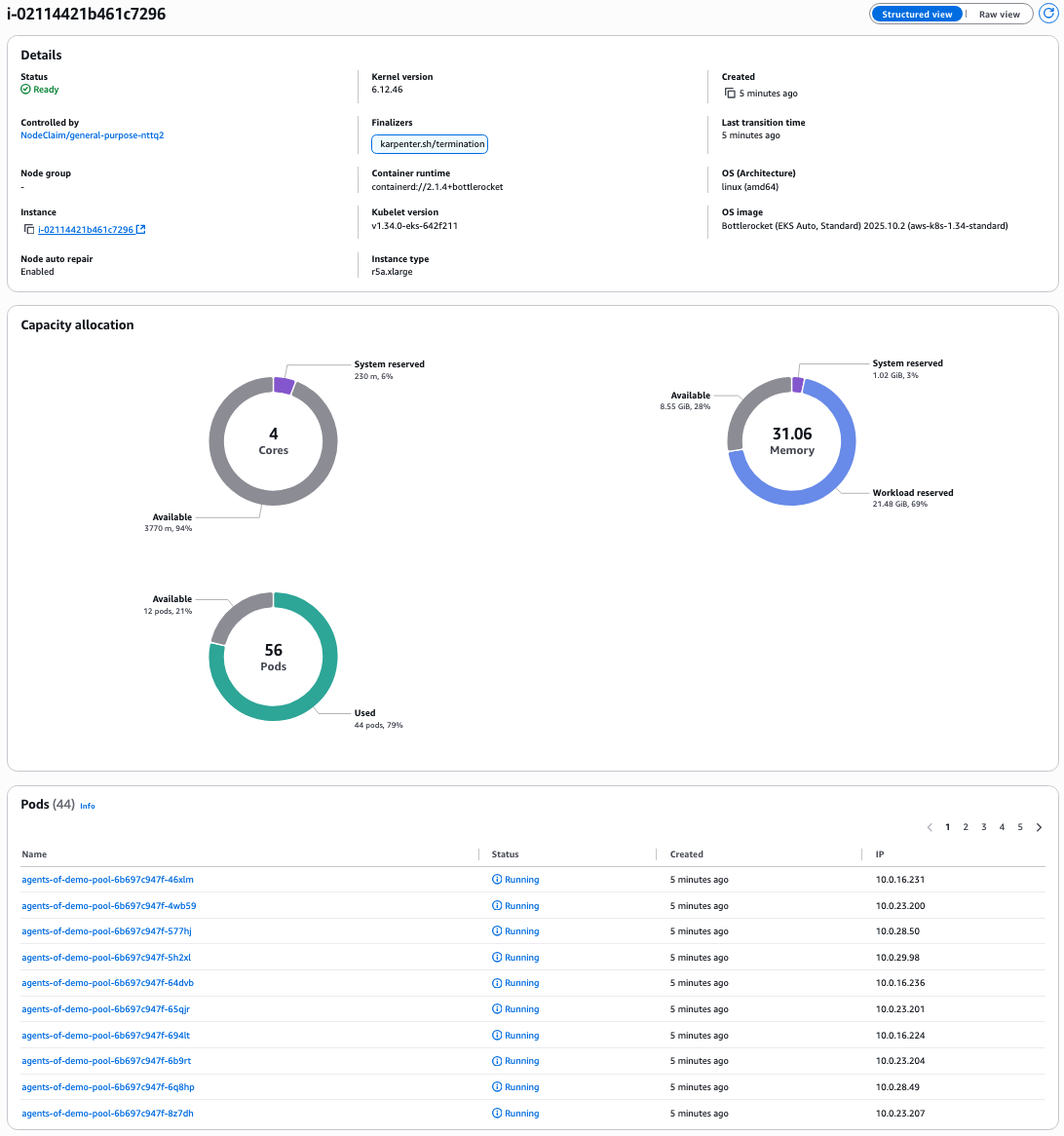

The EKS cluster now has 52 pods running: 2 for the Terraform Operator and 50 for the Terraform agents.

Karpenter automatically scales the general-purpose node pool from 1 to 2 nodes to accommodate the large increase in Terraform agent pods.

Of the 45 new Terraform agent pods, 44 are scheduled on the newly launched r5a.xlarge node.

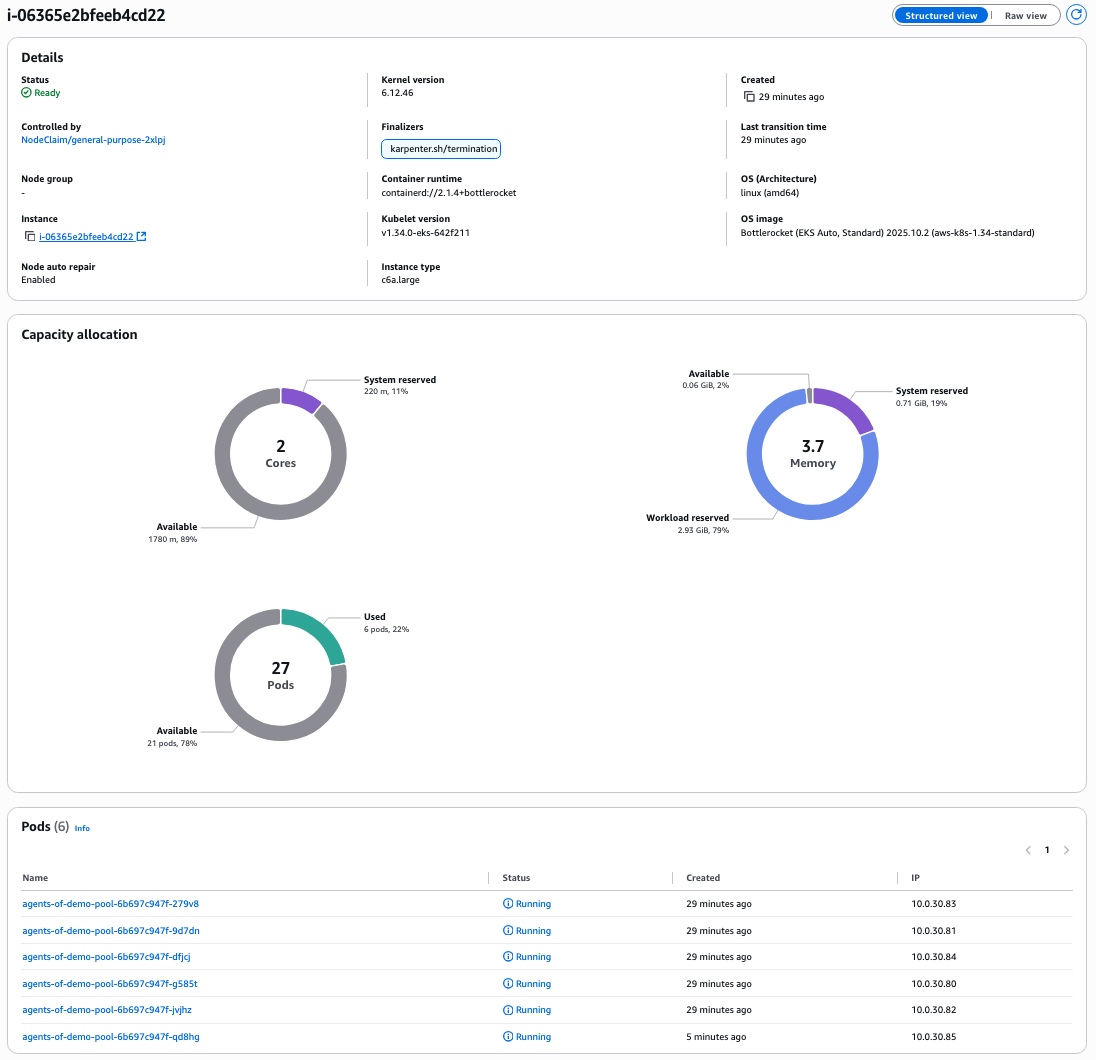

The original node has the original 5 Terraform agent pods and 1 new Terraform agent pod.

Terraform Operator scaling in

Eventually, all the workspaces are applied.

The Terraform agents scale in to 5, matching the minReplicas value specified in the AgentPool configuration.

The EKS cluster shows the 5 Terraform agent pods and 2 Terraform Operator pods running.

After some time with no pending workspace runs or unscheduled pods, Karpenter automatically consolidates resources by reducing the number of nodes.
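Consolidation can be pictured with a companion toy check: a node can be drained if every pod on it fits into the free memory of the remaining nodes. This single-resource, first-fit sketch is hypothetical and much simpler than Karpenter's real consolidation, which also considers CPU, pod disruption budgets, and instance pricing. The `can_drain` function and the sizes used with it are illustrative.

```python
def can_drain(node: int, capacity_mib: list[int], pods_mib: list[list[int]]) -> bool:
    """Could every pod on `node` be repacked onto the other nodes? (memory only)"""
    # Free memory on every node except the one being considered for removal.
    free = {
        i: cap - sum(pods)
        for i, (cap, pods) in enumerate(zip(capacity_mib, pods_mib))
        if i != node
    }
    for request in sorted(pods_mib[node], reverse=True):
        # First fit: take the first other node with enough free memory.
        target = next((i for i, room in free.items() if room >= request), None)
        if target is None:
            return False
        free[target] -= request
    return True
```

With two 4096 MiB nodes, one holding five 512 MiB pods and the other empty, either node could be drained, so a consolidating autoscaler would keep only one of them.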

The remaining general-purpose node now shows only 5 Terraform agent pods.

Next steps

The combination of EKS Auto Mode and the HCP Terraform Operator creates a powerful solution for dynamically scaling Terraform agents. This approach delivers significant benefits for platform teams managing infrastructure at scale, from operational efficiency and streamlined workflows to enhanced security and consistent performance. By automating the provisioning, scaling, and lifecycle management of agent infrastructure, teams can focus on strategic infrastructure delivery rather than managing the underlying components.

As demonstrated in this blog, the solution adapts to changing workload demands, adding capacity when Terraform runs increase and consolidating resources when demand decreases. Agent capacity tracks demand within the minReplicas and maxReplicas bounds you set, without manual intervention.

Get started with Terraform agents on EKS Auto Mode by following these additional resources:

Try the HCP Terraform Agent tutorial to learn more about agent capabilities

Note that EKS Auto Mode charges are in addition to the standard Amazon EC2 instance charges. Like EC2 instance charges, EKS Auto Mode charges are billed per second, with a one-minute minimum. Refer to the EKS pricing page for more information.
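The billing rule above can be expressed as a small calculation: each node's EC2 and EKS Auto Mode charges are prorated per second, with at least 60 seconds billed. The hourly rates passed to this sketch are placeholders, not real prices. Take actual rates for your instance type and Region from the EC2 and EKS pricing pages. The sketch also assumes whole-second runtimes.

```python
def billable_seconds(runtime_seconds: int) -> int:
    """Per-second billing with a one-minute minimum."""
    if runtime_seconds < 0:
        raise ValueError("runtime cannot be negative")
    return max(60, runtime_seconds)


def node_cost(runtime_seconds: int, ec2_hourly: float, auto_mode_hourly: float) -> float:
    """EC2 charge plus the EKS Auto Mode charge for one node, both prorated per second."""
    return billable_seconds(runtime_seconds) * (ec2_hourly + auto_mode_hourly) / 3600
```

A node that runs for only 30 seconds is still billed for 60. This matters when short bursts of Terraform runs cause nodes to be launched and retired quickly.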